Research evaluation in context 4: the practice of research evaluation

The Strategy Evaluation Protocol describes a forward-looking evaluation for which research organisations are responsible. Context, aims and strategy of units are key. Very timely and relevant, yet it is easier said than done.

Previous posts on the Strategy Evaluation Protocol 2021-2027 describe the criteria and aspects and the process and responsibilities. Asking to judge research units on their own merits and putting the responsibility for the evaluation with the research organisations proves quite a challenge. Evaluation might not always happen according to protocol, yet the process itself offers opportunities to reflect and define. And as such it has value.

This blogpost is based on my long-term involvement with the SEP as a member of the SEP working group; on information shared during formal committee meetings; on input from participants at workshops and briefing sessions; on discussions with representatives of units preparing an evaluation; on unpublished and published reviews of the SEP; and on self-evaluation and assessment reports available online. It is also informed by the evaluation of my own research units (past and current). The new SEP 2021-2027 became effective this year, so there is not much practice yet. However, most of what is addressed in this post has been part of previous protocols.

Aims and strategy

Evaluation takes place in light of the aims and strategy of the unit. This is nothing new: it has been part of the protocol for almost 20 years now. The current protocol puts even more emphasis on it, with its changed name (from Standard to Strategy) and a separate appendix that explains “aims” and “strategy”.

The issue is the assumption that units have a clear strategy. This is often not the case. Even when a unit has one, it is not always shared and known, nor does it always guide decisions and choices. Some units, such as my own, organise internal meetings to prepare for the evaluation. We jointly develop an understanding of the past and uncover, or maybe recover, our strategy. Other units reportedly use the moment not so much to look back, but to develop a joint vision for the future.

Having said that, in the past units described broad, common and vague goals, such as excellent, interdisciplinary, or international, or more recently “improve internationalisation, innovation and rigour in research” and “strengthen societal relevance, integrity, and diversity.”

Indicator? What indicator?!

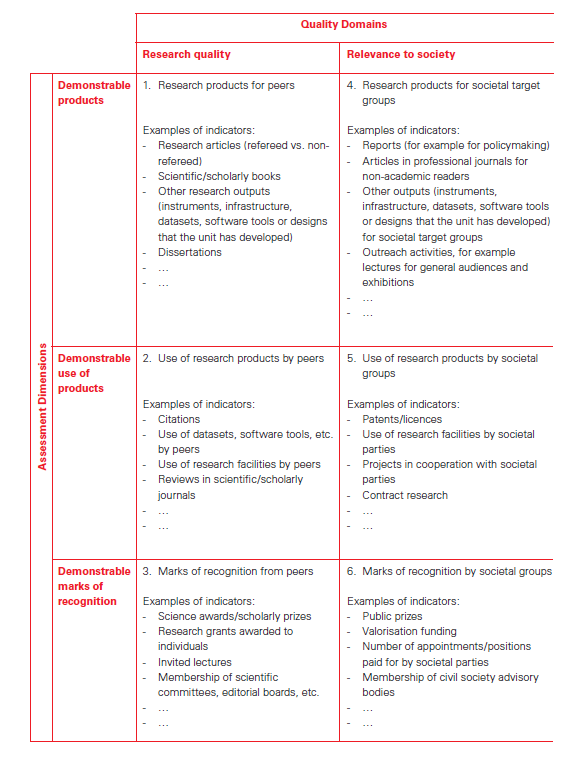

The 2015 and 2021 protocols only describe categories of evidence and mention exemplary indicators for each category. A unit should present and explain its own selection of indicators that fit the unit’s context and strategy.

Some perceive the indicators as a given and mention they are unaware that those are just samples. This is despite the fact that it is stated explicitly and repeatedly, for instance six times in the table with categories of evidence in the 2015 protocol (Figure 1). On the other hand, this misperception might not come as a surprise, given the strict requirements of so many other evaluations.

Also, it is a huge challenge to define proper indicators for research quality and societal impact. At CWTS, we are quite aware of this. But most researchers are not experts in meaningful metrics; their expertise is Caribbean archeology, political economy or chemical biology. And yet they are asked to develop their own indicators. The nationwide initiative Quality and Relevance in the Humanities (QRIH) has taken action and developed sample indicators for SEP evaluations. It has identified indicators for all categories. Some are authorized by a panel; others have been used in a self-evaluation report.

Novel aspects

Over the years novel aspects that reflect developments and debates have become part of the protocol. They include research integrity, diversity and Open Science.

The necessity to address these issues is not always clear to the unit, nor to the committee. To illustrate this, one committee remarked that integrity is less a problem in the humanities than in the natural sciences, and even less so in their discipline, since research “seems inseparable from the individuality of whoever is doing it.” However, the 2015 protocol, which was in use back then, specifically mentions “self-reflection on actions (including in the supervision of PhD candidates)” and “any dilemmas […] that have arisen and how the unit has dealt with them.” I don’t understand how that doesn’t apply.

Other times the protocol asks for more than a unit is allowed to provide. It is unlawful to register an employee’s ethnic or cultural background in the Netherlands, yet the protocol includes these dimensions in its definition of diversity. And while many are aware of the lack of ethnic and cultural diversity, there is discomfort in addressing this, if only because of legal issues.

The relevance of societal relevance

Societal relevance, a subject that I have studied for some time now, proves challenging for units and committees. Sometimes the assessment focuses on structures and collaborations, i.e., on strategy; sometimes on products and results without any explanation for this emphasis; sometimes on societal issues that could benefit from the research, and sometimes on the inherent and potential relevance: “[the] research themes/specializations […] are explicitly relevant to society and the co-creation of public value.”

Nowadays the necessity of a separate criterion for societal relevance isn’t questioned anymore, yet its relative importance apparently is. This is odd, given that the three criteria (the other two are research quality and viability) are not weighed against each other. Societal relevance might be a substantive element of the mission of some units and hardly of importance for others.

The quality of research quality

The protocols describe research quality only briefly. This leaves room for interpretation. Browsing through assessment reports, it is striking to see the many references to publications and publication strategies as a proxy for research quality and strategy. One committee observes a trend across an entire discipline “towards valuing quality over quantity” and that “researchers are no longer encouraged to publish as many articles as possible”. Yet the shift still relates to publications, since researchers “are stimulated to submit primarily top-quality papers in high impact journals.” This is followed by a discussion of citation rates and interdisciplinary research.

National evaluation in context

There is a tension between a joint evaluation across a discipline and the intention to evaluate in context. The initial VSNU protocols of the 1990s¹ prescribed a joint evaluation. One goal, abandoned since 2003, was to ascertain the scientific potential of a discipline. Yet the practice of joint evaluations still exists. It is partly rooted in the law that requires assessment “in collaboration as far as possible with other institutions.”

A joint evaluation requires representatives of a discipline, involving anywhere between two and nine universities, to jointly take action, discuss and decide. Although unintended, this results in an extra practice of negotiating quality and relevance. It also requires one assessment committee only. One reason to abandon the mandatory joint evaluation was that it is hardly possible for one committee to do justice to each and every unit. With the increased focus on the context of the unit, its mission, goals and strategy, this is even more the case.

Between intention and practice

For this blogpost, I have browsed through reports and used my own involvement with the SEP. The result is a very sketchy picture of examples and instances that indicate a difference between intention and practice. It certainly is not a thorough analysis. And yes, there are evaluations where issues are addressed according to the protocol, with ample attention for argumentation and strategy. Also, I don’t want to condemn any practice; on the contrary. Rather, I want to temper the enthusiasm of some.

And we shouldn’t neglect what happens during an evaluation. It has repeatedly been reported that units use an evaluation to re-define and re-adjust their mission, goals and strategy. And even when an evaluation isn’t fully done according to protocol, those involved go through the motions, encounter obstacles, end up in discussions and ultimately negotiate and establish notions of quality and relevance. The execution and result of that process might not always be excellent, but we shouldn’t neglect the benefit of those efforts.

A new protocol will not change a community overnight. However, it urges boards, units and committees to address novel aspects and practices. More on that in the next blogpost.

¹ VSNU (1993). Quality Assessment of Research – protocol 1993. Utrecht: VSNU; VSNU (1994). Quality Assessment of Research – protocol 1994. Utrecht: VSNU; VSNU (1998). “Protocol 1998”. In: Series Assessment of Research Quality. Utrecht: VSNU.

Header image by Ricardo Viana on Unsplash
