Does science need heroes?

Does science need heroes or does it need to reform? Idolizing heroes can worsen bias, inequality, and competition in science. Yet science does require good leadership to ignite structural change. This commentary for a Rijksmuseum Boerhaave symposium on prize cultures aims to address this paradox.

On the occasion of the 2023 Nobel Prize announcements, the Rijksmuseum Boerhaave symposium “Does science need heroes?” attempted to explore the evolution of prize cultures over time and examine the current and potential roles of prizes in contemporary scientific practices. I would like to thank Ad Maas, Curator of Modern Natural Sciences of Rijksmuseum Boerhaave and professor in Museological Aspects of the Natural Sciences at the Centre for the Arts in Society of Leiden University, for inviting me to give a comment on the second day of the symposium. This blog post is an adaptation of the talk I gave there.

Does science need heroes? Like a proper academic, I would answer: yes and no.

Let me start with the latter.

No, the last thing contemporary science needs is heroes. What it does need is structural reform. Science suffers from major flaws, including various forms of bias, structural inequality, lack of transparency and rigor, excessive competition, commercialization, and vanity publishing. It is unhealthy for researchers to want to be a hero in the current system.

But also: yes! Science is in dire need of heroes! Because the science system needs to reform, we need different types of people who can lead the way and set an example with good leadership. This is what I want to address in this commentary.

But first, and inspired by Museum Boerhaave's wonderful collection, I will go back to the 19th century, when the first university ranking was conceived. People often think the first ranking appeared around the 2000s, with the publication of the first commercial ranking. But that is not true. As is often the case, problematic metrics for assessing excellence were in fact invented by scientists themselves!

A few years ago, I examined the work of the American psychologist James McKeen Cattell with two of my colleagues. Cattell lived from 1860 to 1944, served as the long-time editor of the journal Science, and his primary academic interest was the study of 'eminence', a somewhat ambiguous concept that I translate here as excellence, eminence, or greatness. There is an interesting connection between Cattell's work on 'eminence' as the primary 'virtue' of individual researchers and the contemporary university rankings of the most prestigious, high-performing, Nobel Prize-winning universities with the most flourishing research and best teaching environments.

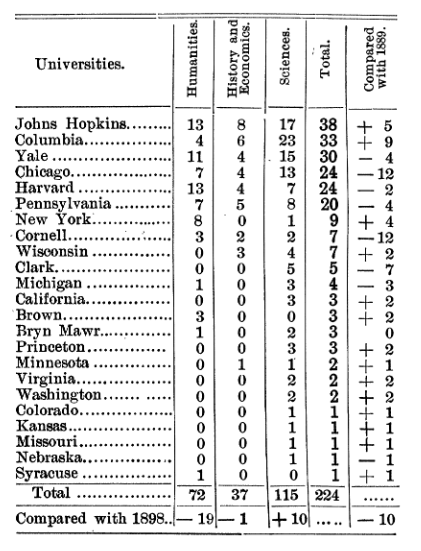

Cattell studied in England and later held an office in Cambridge, where he eventually came into contact with proponents of eugenics. Cattell, and many other contemporaries interested in 'eminence' or greatness, observed a decrease in the number of 'great men' compared to earlier periods, which they found concerning. They feared the biological degeneration of the population, and this fear was exacerbated by a more general concern about the decline of the British Empire. This fear was further intensified by the rise of industrialization, democratization, and a growing working class. The preoccupation with 'great men' was also reflected in a growing interest in the role of the scientist in a time when scientific work increasingly became a 'profession' among other professions.

The scientific method of eugenics gave Cattell the tools to classify 'eminence' in a new way. His idea was that professors could be placed on a single ranked list based on peer review. He asked a large number of scientists to create a list, ranking scientists from the most to the least prominent, based on a selection of names provided in advance. High "output" was insufficient as an indicator of 'eminence'; it was primarily about reputation among peers. What exactly this reputation was based on remained ambiguous. Cattell kept various background and biographical information about the men on the lists, including data on birthplace, residence, age, and especially the university where researchers had received their education.

But his lists also quickly started to serve other purposes: he mapped how 'scientific eminence' was distributed across cities and universities. And I quote from a piece in 1903: "We can tell whether the average scientific standard in one part of the country or at a certain university is higher or lower than elsewhere; we can also quantitatively map the scientific strength of a university or department." So, initially, Cattell's lists were not compiled with the purpose of comparing institutions, but the information these instruments provided was indeed used for these comparisons.

Cattell's study of scientific men and his subsequent ranking of universities were deeply rooted in a larger debate about the role of the scientist. The ideal image of a researcher was evolving significantly at the end of the 19th and the beginning of the 20th century, as I think is happening again today. But back then, older ideas about the gentleman-scholar, driven by intrinsic motivation, were increasingly juxtaposed with ideals that were more in line with the concept of science as a paid profession, just as the current preoccupation with excellence and ranking is related to a much broader discussion about what exactly universities are for today!

Over the past 30+ years the Dutch and European governments have introduced several different policy instruments to foster excellent research. These policy instruments worked by stimulating competition in science. What I find very interesting is how, in the past decades, they have started to shape our perception of excellent science through an interactive feedback loop between policy on the one hand and the practice of doing research on the other. An excellent researcher’s standing is now primarily determined by their research production. This is partly still the science as a profession that Cattell and others instigated, but this profession is now saturated with key performance indicators (like a lot of other professions in neoliberal democratic societies). The primary tactical and strategic aims have become competing for (ever decreasing amounts of) research income, publishing in prestigious journals, and aiming for a Nobel Prize. Put even more strongly, prizes and awards have become important parts of academic CVs. And because of gender and other diversity gaps, this makes it harder for women and minority groups to make a career in science. University rankings use prizes and awards as input, and this trickles down into university policies. Over the past decades, universities have started to think in terms of globalised economic markets for excellent researchers.

The motivation behind policies for excellence perhaps at one point made sense, and Dutch science is doing well by the numbers, but obviously, and this is not often acknowledged, there is a big structural issue with the way we measure excellence. If we are truly honest: current notions of excellence and metrics-driven assessment criteria are threatening to shape the content of the work and the diversity of the subject matter. We are observing that research is designed and adjusted in a way that ensures a good score. We see that people are choosing research questions not just based on interest or importance, but also to improve their chances of getting a job. This ‘thinking with indicators’ is obviously problematic for science when important research questions are not being addressed and even become ‘unthinkable’.

This type of thinking also has consequences for the space in academia for what you could call ‘social’ elements. Think of decreasing collegiality, less commitment to the community, or bad leadership. And if we're mostly noticing and applauding the 'big names,' those veteran academics with a significant reputation, we are hurting the opportunities for talented newcomers and other team members who play crucial roles. What worries me is that the pressure in many places is so high that ambitious young researchers think they cannot go on holiday because otherwise their work will be ‘scooped’. Competitiveness can create feelings of constant failure among early career researchers, as they may never feel they meet the required standards. And even those who are at the top, and we have interviewed many, often feel they must continue to work ever harder to keep out-competing others. Group leaders and heads of department warn each other about the health risks that they run by chronically working very hard, year after year. This is an actual quote from an actual interview:

The example these senior researchers are setting with that behaviour to their younger colleagues is that it is heroic to work eighty hours a week, and that if people can’t keep up, they are not fit for the Champions League. These senior researchers have really internalised that this is what it takes to play the game, and that if you can’t stand the heat, you should get out of the kitchen. But let’s face it, that kitchen is on fire.

For one, we have globally had several severe integrity and reproducibility crises. We have seen the shadow side of competition, where researchers focus too much on finding new things and trying to do that quickly, even if that means that they might not be doing high-quality research. Of course, we all want to tell our students to dream big and ideally, we also provide them with the protected space to develop their own research and strive for the highest quality. However, if in the meantime we also encourage them to focus on chasing after groundbreaking results and judge success mainly by how many times our work gets cited by others, we create a culture where short-termism and risk aversion thrive. This culture is today also driven by a pressure to secure funding in competitive four-year cycles. This, in turn, makes it very hard for researchers to do long-term, risky projects, or work in fields that have a big impact on society but don't get cited as much, like applied sciences or social sciences. As a result, research agendas don't always focus on what society really needs. Also, all this competition can make scientists less likely to collaborate, which is crucial for solving complex problems.

To make science better, we need to change our priorities, and support a wider range of research. We very urgently need more diverse perspectives and inclusive participation in science. We need to collaborate and open up, simply because what is expected of science today greatly exceeds the problem-solving capacity of science alone. And that is why we also really need to rethink the criteria for excellent research. These criteria need to be more inclusive, because research evaluation has the power to affect the culture of research; individual career trajectories and researchers’ well-being; the quality of evidence informing policy making; and importantly, the priorities in research and research funding.

I would also like to address a misunderstanding about the Dutch ‘recognition and rewards’ program. I often hear a concern that the program wants to wishy-washily include everyone and forgets about upholding excellence. I do not think that this is a fair portrayal of the program. At its core, the program is about an honest look in the mirror, and about fixing what is broken. The program is part of a much larger set of national, regional, and global initiatives, including the DORA Declaration and the Leiden Manifesto for research metrics; the Manifesto for Reproducible Science from 2017; FOLEC-CLACSO’s 2020 report on reforming research assessment in Latin America and the Caribbean; Europe’s CoARA; The Future of Research Evaluation synthesis paper by the International Science Council, the InterAcademy Partnership, and the Global Young Academy; and the global UNESCO Recommendation on Open Science.

All these initiatives are part of a global effort to re-think evaluation. And we need other types of leaders and heroes in academia, who will work together to implement responsible forms of research assessment. We should stop sending each other the message that regardless of how hard we all work, there is always more we need to do to succeed. If we want our work to be meaningful, we need to invest more in building strong academic communities that value collaboration and share a commitment to advancing research and teaching, while making a positive impact on and with society.

One of my heroes in life is Pippi Longstocking, or Pippi Langkous in Dutch. I used to watch her show when I was little. Pippi has this saying: “I have never tried this before, so I think I should definitely be able to do that.” I love it. And I believe that we need to reward this type of behaviour more than we currently do in academia, sadly. We should foster taking risks, trying something new, stepping out of our comfort zone, and being bold whilst not taking ourselves too seriously. But in fact, we see the opposite happen among early career researchers. Many of them suffer from anxiety and an unhealthy pressure to conform to a problematic norm. Helping to change that was one of my drivers to be part of this symposium, on a Saturday afternoon.

Header photo: Adam Baker
